Gesture recognition is the technology of detecting and interpreting gestures of the hands and arms. It is a complex field where science and engineering meet. It lets users interact with electronic devices in a simple, natural, and convenient way without wearing any auxiliary devices. It is currently used mainly in human-computer interaction research and development and in human-machine interface design. Because of the potential it continues to show in human-computer interaction applications, it has gradually attracted attention in recent years.

Gesture recognition and micro-motion technology are likely to be the development trend of human-computer interaction in the future. Micro-motion uses multi-angle imaging depth technology. The principle is to compare the images or video streams captured by fixed cameras at different angles, and to calculate the distance from the target object to the center of the camera from the angular offset between the cameras and the differences between the images. In this regard, micro-motion, as an interaction technology rooted deeply in machine vision, pattern recognition, and embedded systems, represents a distinctive achievement in the research and application of gesture recognition.
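
The depth principle described above can be illustrated with the textbook model for two parallel, rectified cameras, in which distance equals focal length times baseline divided by disparity. This is only a minimal sketch under that assumption: the source does not describe the product's actual camera geometry, calibration, or algorithm, and the function name and sample numbers are hypothetical.

```python
def depth_from_disparity(focal_px: float, baseline_m: float,
                         x_left_px: float, x_right_px: float) -> float:
    """Distance in metres to a point seen by two parallel, rectified cameras.

    Assumption (not from the source): an ideal pinhole stereo pair, so that
    depth = focal length * baseline / disparity.
    """
    # Disparity: how far the same point shifts between the two images.
    disparity_px = x_left_px - x_right_px
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of both cameras")
    return focal_px * baseline_m / disparity_px


# Hypothetical numbers: 700 px focal length, cameras 6 cm apart,
# the fingertip appears at x=420 px (left image) and x=350 px (right image).
distance_m = depth_from_disparity(700.0, 0.06, 420.0, 350.0)  # about 0.6 m
```

A nearer hand produces a larger shift between the two images, which is why the difference between the images carries the distance information.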

Zhang Shuo, co-founder of Fengshi Interactive Technology, said that the most common form of human-computer interaction is speech recognition, but that gesture recognition is another. It is classified as a somatosensory technology, and its adoption depends mainly on substantial improvements in precision. The BMW 7 Series, and the Audi V8 in the second half of this year, use gesture recognition for interaction. Gestures, like voice, are just one means of interaction, and the aim is to bridge the gap between voice and somatosensory input: somatosensory input can be affected by lighting, and voice can be affected by noise, including the environment inside the car. Combining the technologies can produce new ways of interacting that come closer to natural behavior. This is the goal for the future.

Micro-motion gesture recognition at three levels

Fengshi Interactive's micro-motion gesture recognition products and solutions use vision-based gesture recognition, so users do not need to wear any auxiliary equipment. Two cameras with custom optical paths built into the product capture images of the hand. The algorithm determines the posture of the hand, the three-dimensional positions of the individual fingers, and their motion trajectories, and then maps them to the corresponding control commands. There are two main features:

First, the hardware level. The product has a dedicated graphics and image processing chip, so the core algorithm does not depend on the hardware of the host system;

Second, the software level. A middleware architecture connects hardware devices and software applications and provides a standard data interface for gesture recognition applications developed on a variety of hardware platforms.

Therefore, micro-motion can support a variety of hardware platforms and operating systems, with great flexibility and scalability.
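
To make the idea of a standard data interface concrete, the sketch below shows one way a middleware could normalize raw sensor output into a single platform-independent record. It is an illustration only: `HandFrame`, `from_sensor_packet`, and the packet keys `seq`, `pose`, and `tips` are hypothetical names, not the product's actual schema or API.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

Point3D = Tuple[float, float, float]


@dataclass(frozen=True)
class HandFrame:
    """One frame of hand data in a platform-independent form (hypothetical schema)."""
    frame_id: int
    posture: str                    # e.g. "open_palm" or "fist"
    fingertips: Dict[str, Point3D]  # finger name -> (x, y, z) position


def from_sensor_packet(packet: dict) -> HandFrame:
    """Convert a raw packet (hypothetical layout) into the standard HandFrame."""
    return HandFrame(
        frame_id=int(packet["seq"]),
        posture=str(packet["pose"]),
        fingertips={
            name: (float(xyz[0]), float(xyz[1]), float(xyz[2]))
            for name, xyz in packet["tips"].items()
        },
    )


# Applications only ever see HandFrame, whatever hardware produced the packet.
frame = from_sensor_packet({"seq": 7, "pose": "fist", "tips": {"index": [10, 25, 300]}})
```

Supporting another hardware platform would then mean writing another small adapter, while application code stays unchanged.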

To meet the needs of customers in specialized industries, the micro-motion gesture recognition solution also includes customization services, so that gesture interaction can reach more fields. The micro-motion gesture recognition solution consists of three layers: sensor module, middleware, and application.

Sensor module: The collective term for the camera sensor and the graphics and image processor. It collects hand image data and runs high-concurrency image-processing algorithms;

Middleware: Responsible for hiding the differences between systems. The current middleware runs on Windows, Windows CE, Android, QNX, Linux, and other types of embedded systems. Advanced action-instruction algorithms are added to the middleware to provide functions such as action-instruction capture and motion trajectory detection;

Applications: The business process logic for VR games, automotive electronics, medical, and other uses.

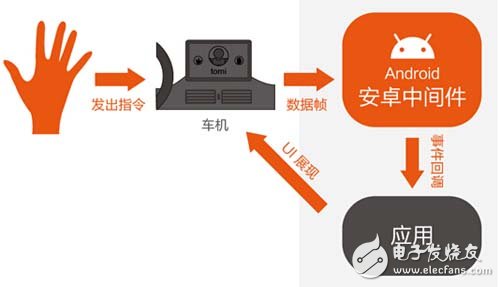

The sensor module collects hand image data, performs high-concurrency image processing, and transmits the resulting data to the middleware frame by frame. At the middleware level, the user can choose among different hardware implementations and operating systems as required. Gestures that are irrelevant to the system can be ignored according to the actual gesture requirements, and the middleware supports both data-driven and event-driven programming of the application logic. When the application receives an event from the middleware, it should immediately give the user feedback on the UI and execute the corresponding business logic. The logical relationship between the three layers is shown in the figure.
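
As a rough illustration of the frame-to-event flow described above, the sketch below turns a per-frame stream of recognized gestures into events and ignores gestures the application has not enabled. The function name, the gesture labels, and the rule of suppressing repeats in consecutive frames are assumptions made for the example, not documented behavior of the middleware.

```python
from typing import Iterable, Iterator, Optional, Set


def gesture_events(frame_labels: Iterable[Optional[str]],
                   enabled: Set[str]) -> Iterator[str]:
    """Yield one event each time an enabled gesture starts.

    frame_labels holds the gesture recognized in each frame (None if none).
    Gestures outside `enabled` are ignored, and so are repeats of the same
    gesture in consecutive frames (an assumed rule for this sketch).
    """
    previous: Optional[str] = None
    for label in frame_labels:
        if label != previous and label in enabled:
            yield label
        previous = label


# Hypothetical frame stream: "wave" is irrelevant to this application.
frames = ["swipe_left", "swipe_left", None, "wave", "fist", "fist", "swipe_left"]
events = list(gesture_events(frames, enabled={"swipe_left", "fist"}))
# events == ["swipe_left", "fist", "swipe_left"]
```

The application would consume `events` and update the UI as each one arrives, rather than inspecting every frame itself.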

The effectiveness of the solution has to be measured in real applications: the aim is to let users easily and efficiently add natural gesture interaction to their existing virtual reality games, in-vehicle electronics, or consumer electronics.

Enhancing products to improve the in-car interactive experience

First, the micro-motion gesture recognition solution is integrated into the user's in-vehicle information system. Because the in-vehicle electronics run Android, the Android version of the middleware is used to connect the gesture recognition sensor module with the client application. The user's system architecture after integrating the micro-motion gesture recognition solution is shown below:

Second, the designer matches the action instructions in the existing gesture library of the micro-motion gesture recognition solution with the functions of the user's application.

Finally, in coordination with the software engineers, the middleware is set up to trigger the application's functions through event callbacks. That is, when the user performs an action instruction that matches the action design, the middleware calls the existing program logic for that function to complete the operation.
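
The callback-based integration in these steps might look like the sketch below, where an existing application function is bound to an action instruction and invoked when that instruction is recognized. `GestureMiddleware`, its `on` and `dispatch` methods, the `MusicPlayer` stand-in, and the gesture names are all hypothetical; the actual API is not described in the source.

```python
from typing import Callable, Dict, List


class GestureMiddleware:
    """Hypothetical facade that maps action instructions to app callbacks."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Callable[[], None]]] = {}

    def on(self, action: str, handler: Callable[[], None]) -> None:
        """Bind an existing application function to an action instruction."""
        self._handlers.setdefault(action, []).append(handler)

    def dispatch(self, action: str) -> bool:
        """Call every handler bound to `action`; return False if none is bound."""
        handlers = self._handlers.get(action, [])
        for handler in handlers:
            handler()
        return bool(handlers)


class MusicPlayer:
    """Stand-in for existing application logic in the car's music system."""

    def __init__(self) -> None:
        self.track = 1

    def next_track(self) -> None:
        self.track += 1


player = MusicPlayer()
middleware = GestureMiddleware()
middleware.on("swipe_right", player.next_track)  # design step: gesture -> function

middleware.dispatch("swipe_right")  # recognized gesture: player.track becomes 2
middleware.dispatch("thumbs_up")    # nothing bound, so nothing happens
```

The application code (`MusicPlayer`) knows nothing about gestures, which is one way to keep the gesture layer loosely coupled to the rest of the system.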

The micro-motion gesture recognition solution has a complete hierarchy of action instructions, and its middleware is cross-platform and provides a high-level API, so the user's software engineers can quickly and easily connect existing application functions to gesture interaction. Throughout development, the architecture of the gesture recognition solution is fully contained within the user's system, but it is not tightly coupled to it and remains relatively independent.

In addition to meeting the basic need for mobility, people are beginning to pursue a more comfortable driving experience and more entertainment, through features such as in-car air conditioning, navigation, car phone, and music systems. The gesture control technology introduced by micro-motion lets the driver adjust and control these in-car functions from the driver's seat with simple hand and arm movements.

In gesture recognition, hand feature data can be collected with data gloves or cameras; an algorithm processes the collected data and converts it into input for application software or a hardware device. The goal of the micro-motion gesture recognition solution is to help users develop gesture recognition applications based on visual capture quickly and efficiently. It provides standard gesture recognition sensor modules along with services such as gesture action design, UI design, and commissioned development, in order to shorten application development cycles and reduce costs, so that users can focus more on developing their own business processes.
