Crowd surveillance and monitoring has become an important field. Both governments and the security sector have begun looking for more advanced ways to monitor people in public places intelligently, so that any anomalous activity can be detected before it is too late to take action. However, several obstacles must still be overcome before this can be achieved effectively. For example, monitoring all crowd activity across an entire city 24 hours a day cannot rely solely on manual observation, especially when thousands of CCTV cameras are installed.

One solution to this problem is to develop a smart camera or vision system that monitors crowd activity automatically, using advanced video analytics, and immediately reports any anomalies to a central control station.

Designing such a smart camera or vision system requires not only standard imaging sensors and optics but also a high-performance video processor to run the video analytics. A powerful onboard processor is needed because advanced video analytics are computationally demanding: most of these techniques rely on computationally intensive video processing algorithms.

FPGAs are well suited to applications with such high performance requirements. The UltraFast design methodology, supported by the high-level synthesis (HLS) capability in the Xilinx Vivado Design Suite, makes it easier to create high-performance FPGA designs. In addition, integrating embedded processors such as the Xilinx MicroBlaze with reconfigurable FPGA logic lets designers port applications with complex control flow to FPGAs with relatively little effort.

With this in mind, we used Vivado HLS, the Xilinx Embedded Development Kit (EDK), and the EDA software tools in the ISE Design Suite to build a prototype crowd motion classification and monitoring system. The design follows what we call a software-control, hardware-acceleration architecture, and it targets a low-cost Xilinx Spartan-6 LX45 FPGA. We completed the overall system design in a short time, and it shows promising results in terms of real-time performance, low cost, and high flexibility.

system design

The overall system design was completed in two phases. The first phase was to develop the crowd motion classification algorithm; once the algorithm had been verified, the next step was to implement it on the FPGA. In the second phase, we focused on the architectural design of an FPGA-based real-time video processing application. This work included developing the real-time video pipeline and the hardware accelerators, and finally integrating both with the algorithm's control and data flow to complete the system.

The following sections describe each development phase, starting with a brief introduction to the algorithm design and then detailing how the algorithm was implemented on the FPGA platform.

algorithm design

Various algorithms for crowd surveillance and monitoring have been proposed in the literature. Most of them start by detecting (or seeding) feature points in the crowd scene, then track these points over time and collect motion statistics. These statistics are then projected onto pre-computed motion models to predict any anomalous activity. Later refinements cluster the feature points and track the clusters rather than individual points.

Our crowd motion classification algorithm is based on the same concept, except that we use template matching for motion estimation instead of traditional methods such as the Kanade-Lucas-Tomasi (KLT) feature tracker. Template matching requires more computation, but it has been shown to significantly improve motion estimation in regions with low or uniform contrast.

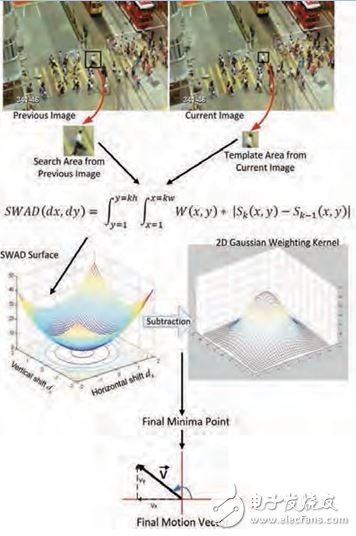

To apply this method to motion estimation, we divide each video frame into a grid of small rectangular patches (tiles) and estimate the motion of each tile by computing the sum of weighted absolute differences (SWAD) between the current and previous frames. Each tile yields a motion vector giving the magnitude and direction of motion between the two frames at that location, so more than 900 motion vectors must be computed across the image. The specific steps involved in calculating these motion vectors are shown in Figure 1.
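The per-tile SWAD search described above can be sketched as an exhaustive block-matching loop. This is a minimal sketch, not the authors' code: it assumes 8-bit grayscale frames, square non-overlapping tiles, a full search over a window of +/- `range` pixels, and a caller-supplied weight array. The names `Frame`, `swad`, `estimateTileMotion`, and `estimateFrameMotion` are hypothetical, and only the C++ standard library is used (the original algorithm was built with OpenCV).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <limits>
#include <vector>

// Hypothetical 8-bit grayscale frame, stored row-major.
struct Frame {
    int width;
    int height;
    std::vector<std::uint8_t> pixels;
    std::uint8_t at(int x, int y) const {
        return pixels[static_cast<std::size_t>(y) * width + x];
    }
};

// Displacement of a tile's content from the previous to the current frame.
struct MotionVector {
    int dx;
    int dy;
    double cost;  // SWAD value of the best match
};

// Sum of weighted absolute differences between the tile whose top-left corner
// is (tx, ty) in `prev` and the same-sized block displaced by (dx, dy) in `curr`.
double swad(const Frame& prev, const Frame& curr, int tx, int ty, int tile,
            int dx, int dy, const std::vector<double>& weights) {
    double sum = 0.0;
    for (int y = 0; y < tile; ++y) {
        for (int x = 0; x < tile; ++x) {
            const int diff = static_cast<int>(prev.at(tx + x, ty + y)) -
                             static_cast<int>(curr.at(tx + x + dx, ty + y + dy));
            sum += weights[static_cast<std::size_t>(y) * tile + x] * std::abs(diff);
        }
    }
    return sum;
}

// Exhaustive search over all displacements in [-range, range] x [-range, range];
// candidate blocks that would fall outside the current frame are skipped.
MotionVector estimateTileMotion(const Frame& prev, const Frame& curr, int tx, int ty,
                                int tile, int range, const std::vector<double>& weights) {
    assert(prev.width == curr.width && prev.height == curr.height);
    assert(weights.size() == static_cast<std::size_t>(tile) * tile);
    MotionVector best{0, 0, std::numeric_limits<double>::infinity()};
    for (int dy = -range; dy <= range; ++dy) {
        for (int dx = -range; dx <= range; ++dx) {
            if (tx + dx < 0 || ty + dy < 0 || tx + dx + tile > curr.width ||
                ty + dy + tile > curr.height) {
                continue;
            }
            const double cost = swad(prev, curr, tx, ty, tile, dx, dy, weights);
            if (cost < best.cost) best = {dx, dy, cost};  // keep the lowest SWAD
        }
    }
    return best;
}

// Splits the frame into non-overlapping tiles and estimates one vector per tile.
std::vector<MotionVector> estimateFrameMotion(const Frame& prev, const Frame& curr,
                                              int tile, int range,
                                              const std::vector<double>& weights) {
    std::vector<MotionVector> field;
    for (int ty = 0; ty + tile <= prev.height; ty += tile) {
        for (int tx = 0; tx + tile <= prev.width; tx += tile) {
            // Each call is independent of the others, so tiles can run in parallel.
            field.push_back(estimateTileMotion(prev, curr, tx, ty, tile, range, weights));
        }
    }
    return field;
}
```

The number of vectors depends on the resolution and tile size, neither of which the article states; for example, a hypothetical 640 x 480 frame split into 16 x 16 tiles would give 40 x 30 = 1,200 vectors per frame.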

Figure 1: The steps to calculate the motion vector, starting with image acquisition (top)

In addition, we apply a weighted Gaussian kernel to improve reliability in occluded and zero-contrast regions of the image. Moreover, because the processing of one tile to compute its motion vector is independent of the processing of every other tile, the method is well suited to parallel implementation on an FPGA.
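The article does not give the exact form of the Gaussian kernel or how it enters the matching cost. One plausible reading, sketched below as an assumption, is an isotropic 2D Gaussian centred on the tile and used as the per-pixel weights in the SWAD sum, so that pixels near the tile centre count more than those at its edges. The function name `gaussianWeights` and the `sigma` parameter are hypothetical. Because every tile is processed the same way and independently, one such mask can be computed once and shared by all tiles.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Builds a tile x tile Gaussian weight mask centred on the tile and normalised
// so that the weights sum to 1. `sigma` is the standard deviation in pixels.
std::vector<double> gaussianWeights(int tile, double sigma) {
    assert(tile > 0 && sigma > 0.0);
    std::vector<double> w(static_cast<std::size_t>(tile) * tile);
    const double centre = (tile - 1) / 2.0;  // geometric centre of the tile
    double total = 0.0;
    for (int y = 0; y < tile; ++y) {
        for (int x = 0; x < tile; ++x) {
            const double dx = x - centre;
            const double dy = y - centre;
            const double v = std::exp(-(dx * dx + dy * dy) / (2.0 * sigma * sigma));
            w[static_cast<std::size_t>(y) * tile + x] = v;
            total += v;
        }
    }
    for (double& v : w) v /= total;  // normalise to unit sum
    return w;
}
```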

After computing motion vectors over the complete image, the algorithm calculates their statistical properties. These include the average motion vector length, the number of motion vectors, the dominant direction of motion, and similar metrics.
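A few of these frame-level statistics can be sketched as follows. The article does not define them precisely, so this sketch makes stated assumptions: vectors no longer than a hypothetical `minLength` threshold are treated as static background and excluded, "number of motion vectors" is taken to mean the count of moving vectors, and the dominant direction is the circular mean of the unit direction vectors. `Vec2`, `MotionStats`, and `computeMotionStats` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Vec2 {
    double dx;
    double dy;
};

struct MotionStats {
    double meanLength;   // mean length of the moving vectors (pixels per frame)
    int movingCount;     // number of vectors longer than minLength
    double dominantDeg;  // circular mean direction in [0, 360); image y axis points down
};

MotionStats computeMotionStats(const std::vector<Vec2>& vectors, double minLength) {
    assert(minLength >= 0.0);
    const double kPi = std::acos(-1.0);
    MotionStats s{0.0, 0, 0.0};
    double sumLen = 0.0, sumCos = 0.0, sumSin = 0.0;
    for (const Vec2& v : vectors) {
        const double len = std::hypot(v.dx, v.dy);
        if (len <= minLength) continue;  // treat short vectors as static background
        sumLen += len;
        sumCos += v.dx / len;  // accumulate unit vectors for the circular mean
        sumSin += v.dy / len;
        ++s.movingCount;
    }
    if (s.movingCount > 0) {
        s.meanLength = sumLen / s.movingCount;
        double deg = std::atan2(sumSin, sumCos) * 180.0 / kPi;
        if (deg < 0.0) deg += 360.0;
        s.dominantDeg = deg;
    }
    return s;
}
```

Averaging unit vectors rather than raw angles avoids the wrap-around problem: the plain mean of 1 and 359 degrees would be 180, whereas the circular mean is 0.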

We also compute a 360-degree histogram of motion vector directions and analyze its properties, such as the standard deviation, mean deviation, and coefficient of variation. These statistical properties are then projected onto a pre-computed motion model to classify the current motion into one of several major categories, and we evaluate them over multiple frames to confirm the classification.
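The direction histogram and its spread measures can be sketched as below. The bin width (one degree) follows from the 360-degree histogram in the text; computing the standard deviation, mean absolute deviation, and coefficient of variation over the bin counts is an assumption about how the authors analyzed it. With this reading, a flat histogram (directions spread randomly) has a low coefficient of variation, while a sharply peaked one (coherent flow) has a high value. `directionHistogram`, `histogramStats`, and `HistogramStats` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

struct HistogramStats {
    double mean;        // mean bin count
    double stdDev;      // population standard deviation of the bin counts
    double meanAbsDev;  // mean absolute deviation of the bin counts
    double coeffVar;    // stdDev / mean, or 0 for an empty histogram
};

// One-degree bins; input angles may lie outside [0, 360) and are wrapped.
std::array<int, 360> directionHistogram(const std::vector<double>& anglesDeg) {
    std::array<int, 360> hist{};
    for (double a : anglesDeg) {
        assert(std::isfinite(a));
        double wrapped = std::fmod(a, 360.0);
        if (wrapped < 0.0) wrapped += 360.0;
        int bin = static_cast<int>(wrapped);
        if (bin >= 360) bin -= 360;  // a tiny negative angle can round up to 360.0
        ++hist[bin];
    }
    return hist;
}

HistogramStats histogramStats(const std::array<int, 360>& hist) {
    const double n = static_cast<double>(hist.size());
    double sum = 0.0;
    for (int c : hist) sum += c;
    const double mean = sum / n;
    double sq = 0.0, absDev = 0.0;
    for (int c : hist) {
        const double d = c - mean;
        sq += d * d;
        absDev += std::fabs(d);
    }
    HistogramStats s{};
    s.mean = mean;
    s.stdDev = std::sqrt(sq / n);
    s.meanAbsDev = absDev / n;
    s.coeffVar = mean > 0.0 ? s.stdDev / mean : 0.0;
    return s;
}
```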

The pre-computed motion model takes the form of a weighted decision tree classifier that takes all of these statistical properties into account when classifying the observed motion. For example, if the observed motion is fast, there is a sudden change in momentum in the scene, and the direction of motion is random or out of the image plane, the motion can be classified as possible panic. The algorithm was developed in Microsoft Visual C++ using the OpenCV library. For a full demonstration of the algorithm, see the web link provided at the end of this article.
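The panic example above can be illustrated with a small hand-written decision tree plus a multi-frame confirmation step. This is only a sketch: the authors' model is a trained, weighted decision tree whose structure and thresholds are not given here, so the categories, feature names, and every threshold below are hypothetical, and out-of-plane motion is not modeled. The confirmation step assumes a simple strict-majority vote over a sliding window of frames, which is one possible way to use multiple frames to confirm a classification.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <map>

enum class CrowdMotion { Static, Normal, Fast, PossiblePanic };

struct FrameFeatures {
    double meanLength;      // average motion-vector length (pixels per frame)
    double momentumChange;  // relative change in total motion versus the previous frame
    double directionCV;     // coefficient of variation of the direction histogram
                            // (low = directions spread out, i.e. random motion)
};

// Hand-written tree with hypothetical thresholds, for illustration only.
CrowdMotion classifyFrame(const FrameFeatures& f) {
    if (f.meanLength < 0.5) return CrowdMotion::Static;
    if (f.meanLength < 4.0) return CrowdMotion::Normal;
    // Fast motion: a sudden momentum change with scattered directions suggests panic.
    if (f.momentumChange > 0.5 && f.directionCV < 1.0) return CrowdMotion::PossiblePanic;
    return CrowdMotion::Fast;
}

// Confirms a class only when it holds a strict majority of the last `window` frames.
class TemporalConfirmer {
public:
    explicit TemporalConfirmer(std::size_t window) : window_(window) { assert(window > 0); }

    // Returns true and writes the winning class to `confirmed` once the window is
    // full and one class holds a strict majority; otherwise returns false.
    bool push(CrowdMotion label, CrowdMotion& confirmed) {
        history_.push_back(label);
        if (history_.size() > window_) history_.pop_front();
        if (history_.size() < window_) return false;
        std::map<CrowdMotion, std::size_t> counts;
        for (CrowdMotion m : history_) ++counts[m];
        for (const auto& kv : counts) {
            if (2 * kv.second > window_) {
                confirmed = kv.first;
                return true;
            }
        }
        return false;
    }

private:
    std::size_t window_;
    std::deque<CrowdMotion> history_;
};
```

A majority vote trades a few frames of latency for robustness against single-frame misclassifications; the window length would need to be tuned against the frame rate.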
