Ron Wilson

10 Gbps Ethernet (10GbE) has become the standard way to connect server cards to the top-of-rack (ToR) switch in a data center rack. What role can it play in the architecture of next-generation embedded systems? This is the story of two separate but interconnected worlds.

Inside the data center

If a technology can be said to have a home, 10GbE's home is the data center rack. There, the standard bridges a complex architectural gap.

Data centers succeed or fail on multiprocessing: the ability to divide huge tasks across hundreds or even thousands of server cards and storage devices. Multiprocessing, in turn, succeeds or fails on communications: the ability to move data efficiently across a huge collection of CPUs, DRAM arrays, solid-state drives (SSDs), and disks, as if they were all part of one large shared-memory, multicore system.

This demand puts tremendous pressure on the interconnect fabric. Obviously, it must provide high bandwidth with minimal latency. And since the interconnect touches almost every server and storage controller in the data center, it must also be economical (which means using commodity CMOS chips), compact, and energy-efficient.

At the same time, the interconnect must support a wide range of services. Data blocks must be able to move freely among DRAM arrays, SSDs, and disks. Traffic must be able to flow between the servers and the Internet. Remote Direct Memory Access (RDMA) must be supported, so that one server can treat another server's memory as if it were local. Some tasks may need to stream data through hardware accelerators without touching DRAM or cache on the server card. And as data centers deploy Network Function Virtualization (NFV), applications will likely begin to expect data transfer rates previously available only in hardwired appliances.

However, these requirements run into some practical constraints. High speed and low latency cost money. A technology that works on the lab bench may not be practical for 50,000 server cards in a warehouse-sized data center. You also have to trade speed against distance: the speed you can achieve over five meters may not be achievable over hundreds of meters. In general, twisted-pair copper is cheaper than fiber. Finally, flexibility is very important, because no one wants to rebuild the data center network from scratch to support a new application.

When these requirements and constraints are considered together, data center architects tend to reach similar conclusions (Figure 1). They use twisted pair to connect all of the server, storage, and accelerator cards in a rack to the ToR switch over 10GbE. They then connect all of the ToR switches in the data center over a longer-range optical Ethernet fabric. The Ethernet protocol allows the use of commodity interface hardware and a robust software stack, while providing a solid foundation for more specialized services such as streaming and security.

Figure 1 A typical data center, before upgrading to a faster network, uses 10GbE for interconnect within each server rack.

Today, rack links are moving from 10 Gbps to 25 or 40 Gbps. But the 10GbE infrastructure is already widely deployed, relatively inexpensive, field-proven, and ready for new uses.

Embedded evolution

Just as 10GbE was cementing its role in server racks, a very different vector of change was developing rapidly in the embedded world. This change affects systems that rely on video, from broadcast production facilities to machine vision systems. Its main cause is the rising bit rate of the raw video signal coming from the camera.

Broadcast may have been the first application area to feel the pressure. 1080p video requires almost 3 Gbps of bandwidth. The industry met this need with its own application-specific Serial Digital Interface (SDI). However, as production facilities come to resemble data centers more and more, pressure is growing to carry multiple video streams over standard network infrastructure. 10GbE is a natural choice, and the move from 1080p to 4K will only accelerate the trend.
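The "almost 3 Gbps" figure can be reproduced with simple arithmetic. The sketch below is illustrative only: the text does not state the video format, so 60 frames per second with 4:2:2 chroma subsampling and 10-bit samples is an assumption, and the function name is made up for this example.

```python
def video_bit_rate_gbps(width, height, frames_per_second, bits_per_pixel):
    """Uncompressed video bit rate in Gbps for the given raster size."""
    return width * height * frames_per_second * bits_per_pixel / 1e9


# Assumption (not from the article): 60 frames/s, 4:2:2 chroma subsampling,
# 10-bit samples. That averages 20 bits per pixel (10 luma + 10 shared chroma).
BITS_PER_PIXEL = 20

# Active picture only.
active_1080p = video_bit_rate_gbps(1920, 1080, 60, BITS_PER_PIXEL)
# SDI also carries the blanking intervals; the full 1080p raster is 2200 x 1125.
total_1080p = video_bit_rate_gbps(2200, 1125, 60, BITS_PER_PIXEL)
# Active picture for 4K (2160p) under the same assumptions.
active_2160p = video_bit_rate_gbps(3840, 2160, 60, BITS_PER_PIXEL)

print(f"1080p60 active picture: {active_1080p:.2f} Gbps")  # 2.49 Gbps
print(f"1080p60 full raster:    {total_1080p:.2f} Gbps")   # 2.97 Gbps
print(f"2160p60 active picture: {active_2160p:.2f} Gbps")  # 9.95 Gbps
```

Under these assumptions a single uncompressed 1080p stream already exceeds a 1 Gbps link, and a single 4K stream comes close to filling a 10 Gbps one.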

Video cameras are also used for machine vision. Some applications can make do with low-resolution, low-frame-rate video. But in many cases the higher resolution, frame rate, dynamic range, and color depth of HD translate into better performance for the whole system. How, then, should the video be transported?

For systems that need only edge extraction or simple object recognition, such as surveillance, most of the data is discarded immediately, so processing the video locally at the camera is the obvious solution. With relatively simple hardware for this local processing, the bandwidth required between the camera and the rest of the system drops drastically and can be handled by a conventional embedded or industrial bus. In many other cases, the camera's built-in video compression can cut bandwidth requirements significantly without affecting application performance.

But this is not always possible. Broadcast production studios are unwilling to lose any data. If they use compression at all, they want it to be lossless. Motion control algorithms may need pixel-level or even sub-pixel edge-position data, which requires uncompressed video. And convolutional neural networks, the approach favored in today's leading designs, may depend on pixel-level data in ways that are completely opaque to the designer. In such cases you may have no choice but to transport all of the camera data.

Even when compression is feasible, a module containing multiple imaging devices, such as the multiple cameras and lidar in a driverless car, can easily consume more than 1 Gbps of bandwidth just sending pre-processed image data.

If you plan to connect high-bandwidth devices to your system over Ethernet, crossing this 1 Gbps boundary is a problem. Once you exceed the aggregate capacity of a 1 Gbps Ethernet link, the next step is not 2 Gbps but 10 Gbps. As a result, 10GbE is becoming steadily more important. However, even with its economies of scale and its ability to use twisted pair or backbone connections, moving to 10GbE links means more expensive chips and controller boards. This is not a trivial migration.
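The jump from 1 Gbps straight to 10 Gbps can be expressed as a small selection rule. This is a minimal sketch, not a sizing tool: the function name, the `max_utilization` parameter, and the per-device rates in the example are all hypothetical values chosen for illustration.

```python
def smallest_link_tier_gbps(stream_rates_gbps, tiers_gbps=(1.0, 10.0),
                            max_utilization=1.0):
    """Return the slowest Ethernet tier that carries the aggregate traffic.

    max_utilization is the fraction of the nominal rate you are willing to
    load (1.0 means the full link). Returns None if no listed tier is enough.
    """
    aggregate = sum(stream_rates_gbps)
    for tier in sorted(tiers_gbps):
        if aggregate <= tier * max_utilization:
            return tier
    return None


# Hypothetical sensor module: four cameras sending pre-processed data at
# 0.3 Gbps each, plus a lidar at 0.1 Gbps. These rates are illustrative.
module_streams = [0.3, 0.3, 0.3, 0.3, 0.1]

print(smallest_link_tier_gbps(module_streams))      # 10.0 (1.3 Gbps > 1 Gbps)
print(smallest_link_tier_gbps(module_streams[:2]))  # 1.0
```

Because the tiers are a decade apart, a module that overshoots 1 Gbps by even a small margin pays for a full 10 Gbps interface.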

Get a backbone

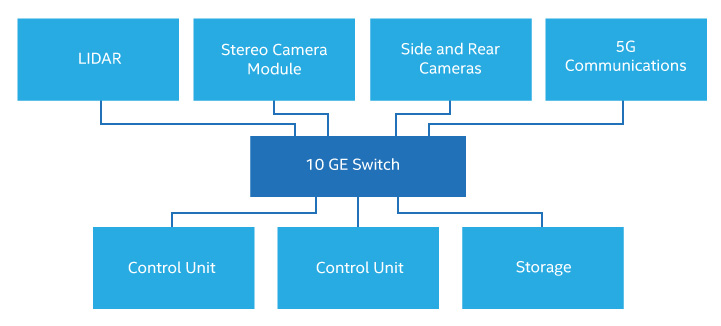

In many systems, 10GbE can carry not just the fastest I/O traffic in the design but all of the system's fast I/O (Figure 2). The resulting gains in simplicity, reliability, cost, and weight can more than offset the cost and power requirements of the interfaces. For example, connecting all of the major modules in a driverless car through a single 10GbE network would eliminate meters, if not kilometers, of wiring. These modules include cameras, lidars, positioning, chassis/transmission systems, security, communications, and electronic control units. A unified approach is a huge advantage over an ever-growing tangle of dedicated high-speed connections, which typically require wiring harnesses to be installed by hand.

Figure 2 In an embedded system, 10GbE provides a single backbone interconnect for a variety of high-bandwidth peripherals.

However, unifying system interconnect on Ethernet can also cause problems. Ironically, the first challenge for 10GbE is bandwidth. A machine vision algorithm that consumes the raw output of two HD video cameras will use as much as half of the bandwidth of a 10GbE backbone. Systems with data traffic beyond several Gbps therefore face difficult choices. You can use multiple 10GbE connections as point-to-point links. Alternatively, if the algorithm tolerates the added latency, you can reduce bandwidth requirements with local compression or data analysis at the source, for example by splitting vision processing between the camera and the control unit.
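To see how two raw HD streams reach roughly half of a 10GbE backbone, the sketch below adds standard Ethernet per-frame overhead to the payload rate. The camera rate is an assumption (1080p at 60 frames/s, 4:2:2, 10-bit, about 2.49 Gbps of active picture), the function names are hypothetical, and higher-layer headers such as IP/UDP are ignored.

```python
# Per-frame Ethernet overhead in bytes:
# preamble + start delimiter (8), MAC header (14), FCS (4), inter-frame gap (12).
PER_FRAME_OVERHEAD_BYTES = 8 + 14 + 4 + 12


def wire_rate_gbps(payload_gbps, payload_bytes_per_frame=1500):
    """Link bandwidth consumed when payload is carried in Ethernet frames."""
    frame_bytes = payload_bytes_per_frame + PER_FRAME_OVERHEAD_BYTES
    return payload_gbps * frame_bytes / payload_bytes_per_frame


def backbone_share(payload_rates_gbps, link_gbps=10.0):
    """Fraction of the link consumed by the given payload streams."""
    return sum(wire_rate_gbps(rate) for rate in payload_rates_gbps) / link_gbps


# Assumed raw camera rate, not a figure from the article:
# 1920 x 1080 pixels x 60 frames/s x 20 bits per pixel.
CAMERA_GBPS = 1920 * 1080 * 60 * 20 / 1e9

share = backbone_share([CAMERA_GBPS, CAMERA_GBPS])
print(f"Two raw HD cameras use {share:.0%} of a 10GbE backbone")  # 51%
```

With these assumptions, two cameras leave less than half of the link for every other module on the backbone.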

Another problem is cost. A small, low-bandwidth sensor may not be a good candidate for a $250 10GbE interface, or even a $50 chip. You may want to aggregate several such devices into a single concentrator, or simply give them a separate, low-bandwidth industrial bus.

Time is everything

In the abstract, this looks like a very promising scenario. Data centers have built up a large infrastructure of 10GbE chips, media, and boards. Now that these huge computing facilities are moving to 25 or 40GbE, the vendors behind that infrastructure are looking for new markets. At the same time, the data rates of some embedded systems have quickly outgrown the commonly used 1GbE link, creating exactly the opportunity 10GbE vendors are looking for.

But it is not quite that simple. Real embedded systems care about latency and other quality-of-service parameters. If a data center ToR switch frequently suffers unexpected delays, the worst outcome is a longer execution time and a higher cost for a workload that was not time-critical in the first place. In the embedded world, if you miss a deadline you break something, and it is usually something big and expensive.

This is a long-standing problem in embedded networking, and the embedded community has produced a solution: a family of IEEE 802.1 standards collectively known as Time-Sensitive Networking (TSN). TSN is a set of additions and changes to the 802.1 standard at Layer 2 and above that allows Ethernet to offer guaranteed levels of service beyond the traditional "best-effort" service.

So far, three elements of TSN have been published: 802.1Qbv Enhancements for Scheduled Traffic, 802.1Qbu Frame Preemption, and 802.1Qca Path Control and Reservation. Each defines a service that is critical to using Ethernet in real-time systems.

One of these services is to predefine paths through the network for virtual connections, rather than forwarding packets on a "best-effort" basis at each hop. In an embedded system, where the entire network is often a single switch, this facility alone may not matter much.

However, other parts of that specification are closer to real needs: they allow bandwidth or stream reservation for a connection, and redundancy to ensure delivery. Another service sets up a frame transmission schedule for connections on a network that carries both scheduled traffic and "best-effort" traffic. Yet another element defines a mechanism for preempting a frame that is already being transmitted in order to send a scheduled or higher-priority frame. Together, these capabilities allow a TSN network to guarantee bandwidth for a virtual connection, create virtual streaming connections, or guarantee a maximum latency for the frames of a virtual connection.
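The scheduled-traffic idea can be illustrated with a toy model. The sketch below is a simplification written for this article, not the mechanism defined in 802.1Qbv: it assumes one repeating cycle divided into windows, each open to a single traffic class, and it only lets a frame start if it can finish before its window closes. The `Window` type, the function names, and the 100 µs cycle are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Window:
    start_us: float     # offset from the start of the cycle
    end_us: float       # offset at which the window closes
    traffic_class: str  # the only class allowed to transmit in this window


def frame_time_us(frame_bytes, link_gbps=10.0):
    """Wire time of a frame, including preamble (8 B) and inter-frame gap (12 B)."""
    return (frame_bytes + 20) * 8 / (link_gbps * 1000)


def next_start_us(arrival_us, frame_bytes, traffic_class, schedule, cycle_us,
                  link_gbps=10.0):
    """Earliest start time at which the frame fits entirely inside a window
    open to its traffic class. Checks the current cycle and the next one."""
    needed = frame_time_us(frame_bytes, link_gbps)
    first_cycle = int(arrival_us // cycle_us)
    for cycle in (first_cycle, first_cycle + 1):
        base = cycle * cycle_us
        for window in sorted(schedule, key=lambda w: w.start_us):
            if window.traffic_class != traffic_class:
                continue
            start = max(arrival_us, base + window.start_us)
            if start + needed <= base + window.end_us:
                return start
    raise ValueError("frame does not fit in any window for its class")


# Hypothetical 100 us cycle: 10 us reserved for scheduled traffic,
# the remainder left to best-effort traffic.
CYCLE_US = 100.0
SCHEDULE = [Window(0.0, 10.0, "scheduled"), Window(10.0, 100.0, "best_effort")]

# A scheduled frame arriving inside its window goes out immediately.
print(next_start_us(3.0, 256, "scheduled", SCHEDULE, CYCLE_US))
# A full-size best-effort frame arriving just before the cycle boundary is
# held back so that it cannot delay the next scheduled window.
print(next_start_us(99.5, 1518, "best_effort", SCHEDULE, CYCLE_US))
```

In this model a scheduled frame never waits behind best-effort traffic, so its worst-case delay is bounded by the cycle length. Frame preemption addresses the same blocking problem differently, by interrupting a frame that is already on the wire, and is not modeled here.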

Since TSN is essentially an overlay on 802.1 networking, running TSN over 10GbE is feasible. At least one vendor has announced 10GbE media access controller (MAC) intellectual property (IP) cores that implement TSN features and work with standard physical coding sublayer IP and transceivers. A 10GbE TSN backbone can therefore be implemented today in a reasonably priced FPGA or ASIC.

Using 10GbE for system interconnect in an embedded design is not a panacea. And meeting real-time requirements with the TSN extensions may call for a Layer 2 solution different from the one currently used in the data center. However, for embedded designs that must support high internal data rates, such as driverless cars or vision-based machine controllers, 10GbE used as a point-to-point link or as a backbone interconnect may be an important alternative.
